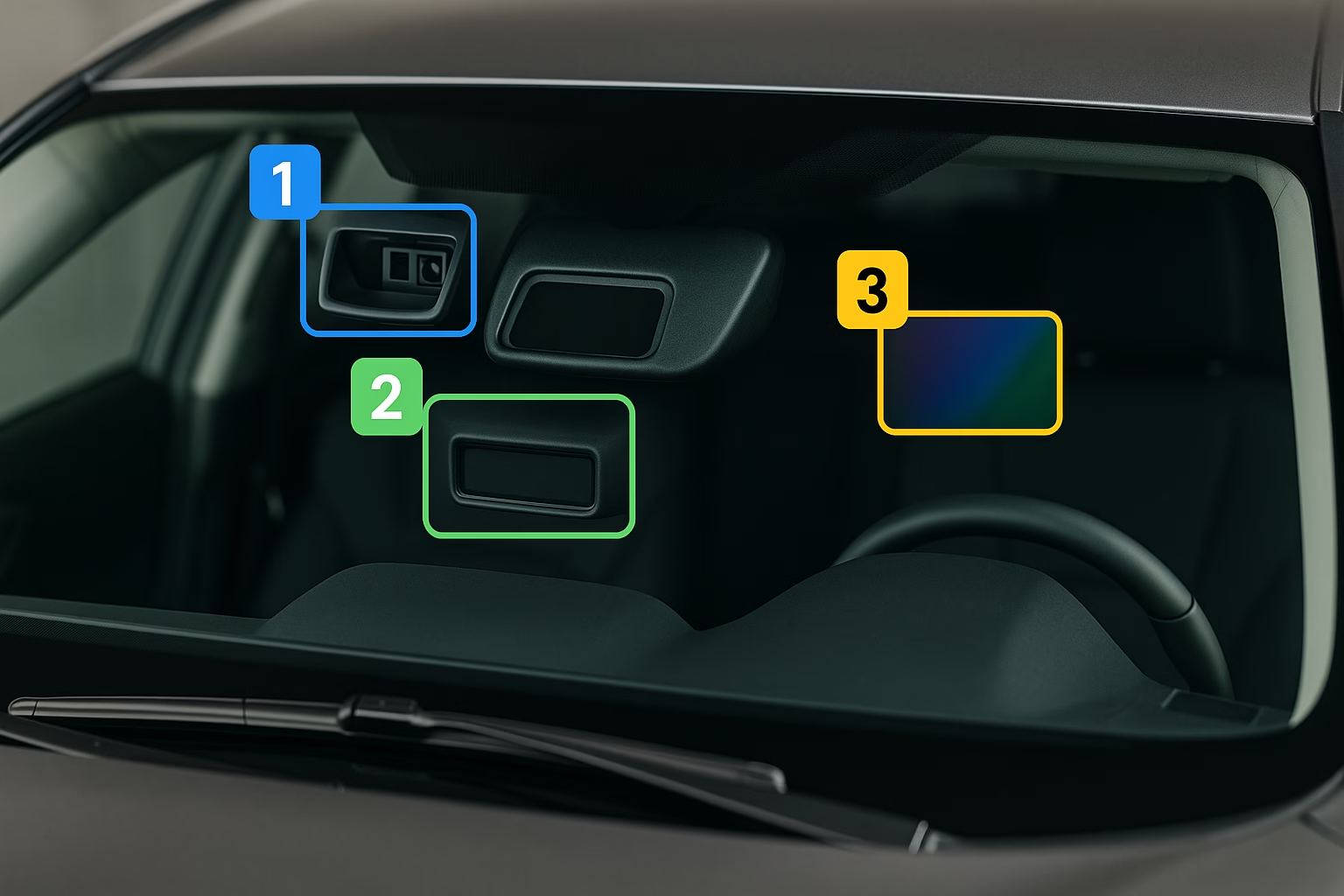

Sensor fusion lies at the heart of advanced driver assistance systems (ADAS) and automated driving. Instead of relying on a single type of detector, modern vehicles combine data from cameras, radar, lidar and ultrasonic sensors to build a more complete view of the environment. Each technology has strengths and weaknesses: cameras offer high-resolution images for lane markings and traffic lights but struggle in low light; radar sees through fog and rain and measures distance and speed precisely but offers coarse resolution; lidar provides centimetre-level 3D maps but is expensive; ultrasonic sensors help with parking at close range. By fusing these inputs, the vehicle can draw on the best aspects of each to produce a robust 360° picture.

Why multiple sensors are necessary

No single sensor can see everything. A forward camera might miss a fast-approaching motorcycle in heavy rain, while radar alone may not detect a lane-marking change. According to a CarADAS overview of sensor fusion, combining data from cameras, radar, lidar and ultrasonic sensors provides redundancy so that if one sensor fails or is obstructed, the others can compensate. The goal is not to pick one sensor over another but to merge their complementary data to support features like collision avoidance, adaptive cruise control and lane departure warning. This redundancy is also a safety requirement for higher levels of automation, which must reliably detect vehicles, pedestrians and obstacles in all weather conditions.
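
To illustrate how redundancy can work in software, here is a minimal Python sketch of one common fusion technique, inverse-variance weighting, applied to a single range measurement. It assumes each sensor reports a range with an independent, roughly Gaussian error of known standard deviation; the function name `fuse_range` and the example numbers are hypothetical and not taken from any manufacturer's system.

```python
import math
from typing import Optional


def fuse_range(
    estimates: dict[str, Optional[tuple[float, float]]]
) -> Optional[tuple[float, float]]:
    """Fuse independent range estimates by inverse-variance weighting.

    `estimates` maps a sensor name to (range_m, std_dev_m), or to None when
    the sensor is blocked or faulty. Returns (fused_range_m, fused_std_m),
    or None if no sensor is currently available.
    """
    weight_sum = 0.0
    weighted_range = 0.0
    for reading in estimates.values():
        if reading is None:
            continue  # skip obstructed or failed sensors; the rest still count
        range_m, std_m = reading
        weight = 1.0 / (std_m ** 2)  # more precise sensors get more weight
        weight_sum += weight
        weighted_range += weight * range_m
    if weight_sum == 0.0:
        return None  # nothing to fuse
    return weighted_range / weight_sum, math.sqrt(1.0 / weight_sum)


# Hypothetical readings: camera range is noisier than radar range.
print(fuse_range({"camera": (42.0, 2.0), "radar": (40.0, 0.5)}))
# Camera blinded by glare: the result falls back to the radar alone.
print(fuse_range({"camera": None, "radar": (40.0, 0.5)}))
```

With both sensors available, the radar's tighter error dominates the fused range and the fused uncertainty is smaller than either input; if the camera drops out, the output degrades gracefully to the radar reading instead of disappearing.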

How sensor fusion works

Sensor fusion algorithms collect raw signals from different sensors and convert them into actionable information. CarADAS describes a four-step process: detection, segmentation, classification and monitoring. Detection filters raw data to find potential objects; segmentation isolates the object from its background; classification uses machine learning to determine whether the object is a vehicle, pedestrian, cyclist or other obstacle; and monitoring tracks the object’s trajectory over time. Fusing radar and camera data improves classification, because the camera provides shape and colour while radar provides distance and speed. The combined output feeds into driver assistance functions that warn the driver or take corrective actions.
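
The step where camera and radar data are combined can be sketched as object association: each camera detection (which carries the object class) is paired with the radar detection closest in bearing (which carries distance and speed). This minimal Python sketch assumes both sensors already report objects in a shared vehicle coordinate frame. The class names, fields and the 2° gate are illustrative assumptions, and the greedy nearest-neighbour matching stands in for the probabilistic association and tracking that production systems typically use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CameraObject:
    label: str          # class from the camera, e.g. "vehicle", "pedestrian"
    azimuth_deg: float  # bearing relative to the vehicle centreline


@dataclass
class RadarObject:
    azimuth_deg: float
    range_m: float
    range_rate_mps: float  # negative when the object is closing


@dataclass
class FusedObject:
    label: str             # taken from the camera
    range_m: float         # taken from the radar
    range_rate_mps: float  # taken from the radar


def associate(cameras: list[CameraObject], radars: list[RadarObject],
              gate_deg: float = 2.0) -> list[FusedObject]:
    """Pair each camera object with the nearest unused radar object in azimuth.

    Candidate pairs more than `gate_deg` apart are rejected.
    """
    fused = []
    used: set[int] = set()
    for cam in cameras:
        best: Optional[int] = None
        best_diff = gate_deg
        for i, rad in enumerate(radars):
            diff = abs(cam.azimuth_deg - rad.azimuth_deg)
            if i not in used and diff <= best_diff:
                best, best_diff = i, diff
        if best is not None:
            used.add(best)
            r = radars[best]
            fused.append(FusedObject(cam.label, r.range_m, r.range_rate_mps))
    return fused


cameras = [CameraObject("vehicle", 0.5), CameraObject("pedestrian", -10.0)]
radars = [RadarObject(0.0, 35.0, -4.0), RadarObject(20.0, 60.0, 0.0)]
# The pedestrian has no radar return within the gate, so only the vehicle is fused.
print(associate(cameras, radars))
```

In this toy version an unmatched camera object is simply dropped; a fuller implementation would more likely keep it as a camera-only track and let the monitoring step decide what to do with it.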

For example, blind-spot monitoring may rely on radar to detect vehicles in adjacent lanes while a camera confirms lane markings. Rear cross-traffic alert systems use radar and ultrasonic sensors to watch for approaching vehicles when reversing. Automatic emergency braking integrates forward-looking camera and radar inputs to detect stationary or slow-moving vehicles and apply the brakes. Adaptive cruise control combines long-range radar to measure the speed of vehicles ahead with cameras that read speed-limit signs and lane lines. Without sensor fusion, each system would have to work around the weaknesses of its single sensor, typically by issuing more conservative warnings.
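
Automatic emergency braking shows why the inputs are cross-checked. The Python sketch below computes time-to-collision (TTC) from radar range and closing speed, and only requests braking when the TTC falls below a threshold and the camera has classified the object as something worth braking for. The 1.5 s threshold, the label set and the function names are hypothetical; real AEB logic is manufacturer-specific and typically far more elaborate, with staged warnings and speed-dependent thresholds.

```python
from typing import Optional

# Hypothetical set of camera classes that justify an emergency stop.
BRAKE_WORTHY = {"vehicle", "pedestrian", "cyclist"}


def time_to_collision(range_m: float, range_rate_mps: float) -> Optional[float]:
    """Seconds until contact at constant closing speed; None if not closing."""
    if range_rate_mps >= 0.0:
        return None  # object is holding distance or pulling away
    return range_m / -range_rate_mps


def should_brake(range_m: float, range_rate_mps: float,
                 camera_label: Optional[str],
                 ttc_threshold_s: float = 1.5) -> bool:
    """Brake only if radar predicts an imminent collision AND the camera
    confirms a relevant object class (illustrative values only)."""
    ttc = time_to_collision(range_m, range_rate_mps)
    if ttc is None or ttc > ttc_threshold_s:
        return False
    return camera_label in BRAKE_WORTHY


print(should_brake(15.0, -12.0, "vehicle"))  # radar and camera agree
print(should_brake(15.0, -12.0, "sign"))     # camera vetoes a false radar target
```

Requiring camera confirmation suppresses braking for a radar return that the camera identifies as a sign, but it also means a blinded camera would block braking. Deciding how much to trust each sensor when the other is degraded is one of the core design trade-offs in a fused system.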

Benefits for technicians and drivers

For technicians, understanding sensor fusion is crucial because calibration errors or sensor degradation can compromise the entire system. When a camera is refitted during a windscreen replacement or a radar unit is realigned after bumper repairs, the system must be recalibrated to ensure that the fused data lines up correctly. Misaligned sensors can cause false warnings or missed detections, which could lead to unnecessary braking or failure to detect hazards. CarADAS notes that sensor fusion provides redundancy so that one sensor can back up another when conditions change. That redundancy only works if each sensor is providing accurate data; technicians must therefore inspect mounting angles, clean lenses and ensure that static or dynamic calibration procedures have been performed.

Drivers benefit because fused systems tend to be more reliable than single-sensor designs. A radar could mistake a metal sign for a vehicle, but the camera recognises it as a sign rather than a vehicle, so the fusion algorithm avoids an unnecessary brake application. When a camera is blinded by glare, radar continues to track the vehicle ahead. As vehicles move towards hands-free Level 2+ automated features, sensor fusion will underpin functions like traffic-jam assist, automatic lane changing and even low-speed self-parking. These systems still require an attentive driver: driver assistance technologies are designed to warn the driver or take action to avoid a crash, but they do not replace the driver.

Challenges and best practices

Sensor fusion introduces technical challenges. The vehicle’s electronic control units (ECUs) must synchronise data streams with different refresh rates and coordinate dozens of messages per second. Environmental factors like heavy rain, snow or mud can block radar or camera sensors and confuse the fusion algorithm. Software updates and hardware upgrades can change the way data is processed, meaning that calibration procedures must be updated accordingly. Workshops need to maintain clean, temperature-controlled environments for static calibration and find safe road segments for dynamic calibration, as inaccurate calibration will propagate through the fusion layer.
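
One simple way to align streams with different refresh rates is to resample one stream at the other's timestamps. The Python sketch below linearly interpolates radar range readings to the time of a camera frame. The 20 Hz radar rate, the 30 Hz camera rate, the values and the function name are assumptions for illustration; production ECUs often rely on synchronised hardware timestamps and predict object states forward with a tracking filter rather than simple interpolation.

```python
from bisect import bisect_left


def interpolate_at(timestamps: list[float], values: list[float], t: float) -> float:
    """Linearly interpolate a sampled sensor signal to time t (seconds).

    `timestamps` must be sorted ascending. Times outside the sampled window
    are clamped to the nearest sample rather than extrapolated.
    """
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_left(timestamps, t)  # first index with timestamps[i] >= t
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical radar samples every 0.05 s (20 Hz) of a closing target.
radar_t = [0.0, 0.05, 0.10]
radar_range = [40.0, 39.5, 39.0]

# Camera frame captured at 1/30 s (30 Hz): estimate radar range at that instant.
print(interpolate_at(radar_t, radar_range, 1 / 30))
```

Interpolating rather than taking the nearest radar sample avoids pairing a camera frame with a range that is up to half a radar cycle stale, which matters more as closing speeds rise.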

Technicians should follow these best practices when servicing vehicles with sensor fusion:

- Identify all equipped sensors. Check the service manual or scan tool to determine which ADAS features are installed and what sensors they use.

- Inspect sensor mounts and clear obstructions. Clean camera lenses and radar covers; remove ice, dirt, or aftermarket accessories that block the sensor field of view.

- Perform wheel alignment and suspension checks. Calibration often begins with an alignment because slight changes in toe or camber can alter sensor aim. Modern Tire Dealer explains that if the wheels aren’t straight, neither is the ADAS; slight misalignment near the wheel becomes a large error at 100 metres.

- Follow manufacturer calibration procedures. Use the correct targets, radar reflectors or scan tools for static and dynamic calibration. CarADAS notes that static calibration uses targets and stands in a controlled environment, while dynamic calibration is performed on the road at specific speeds and conditions. Calibrating the sensors together, rather than each in isolation, helps the fusion algorithm line up their outputs.

- Verify operation with test drives. After calibration, road-test the vehicle to ensure that warnings and interventions occur at the right time.
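
The point about small misalignments growing with distance is simple trigonometry: a sensor aimed off by an angle θ points d × tan(θ) to the side at distance d. The short Python sketch below assumes the misalignment translates directly into a yaw error of the sensor's line of sight, which simplifies how toe and thrust-angle errors actually propagate into the sensor reference; the function name is hypothetical.

```python
import math


def lateral_error_m(misalignment_deg: float, distance_m: float) -> float:
    """Sideways offset of a sensor's aim point at a given distance ahead."""
    return distance_m * math.tan(math.radians(misalignment_deg))


for angle in (0.1, 0.5, 1.0):
    print(f"{angle:.1f} deg -> {lateral_error_m(angle, 100.0):.2f} m at 100 m")
```

It prints 0.17 m, 0.87 m and 1.75 m respectively, so even a one-degree error shifts the aim point by well over a metre at 100 metres, a large fraction of a lane width.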

Looking ahead: AI, V2X and increased complexity

The future of sensor fusion is tied to advances in artificial intelligence and vehicle-to-everything (V2X) communication. CarADAS forecasts that improved AI will allow systems to better interpret complex scenes and adapt to sensor failures or poor weather, while V2X will enable vehicles to share data about hazards beyond line of sight. As the number of sensors grows and data rates increase, vehicles will require more powerful processors and high-speed networks to handle fusion tasks. Standardisation of sensor interfaces and calibration procedures will also become important so that independent workshops can service mixed fleets.

Hiran Alwis is an automotive lecturer and ADAS specialist with over 15 years of experience in diagnostics, advanced safety systems, and technical training. He founded ADAS Project to help everyday drivers and workshop technicians understand and safely use advanced driver assistance systems.